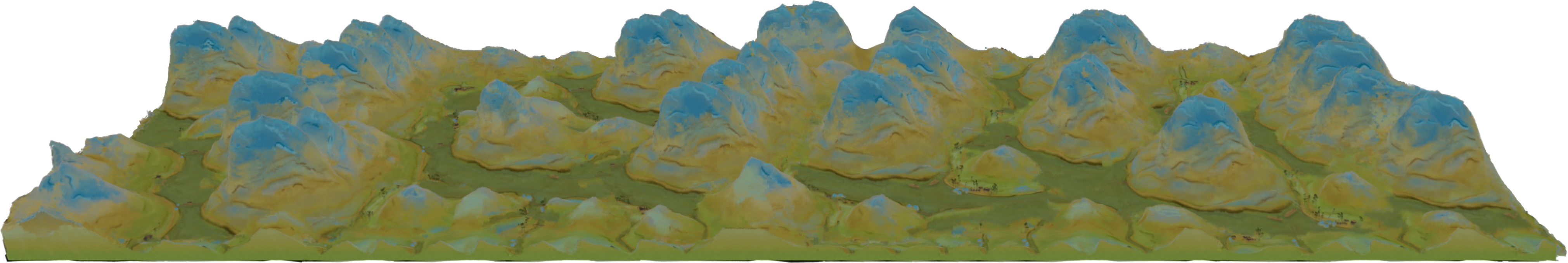

Higher-resolution Generation

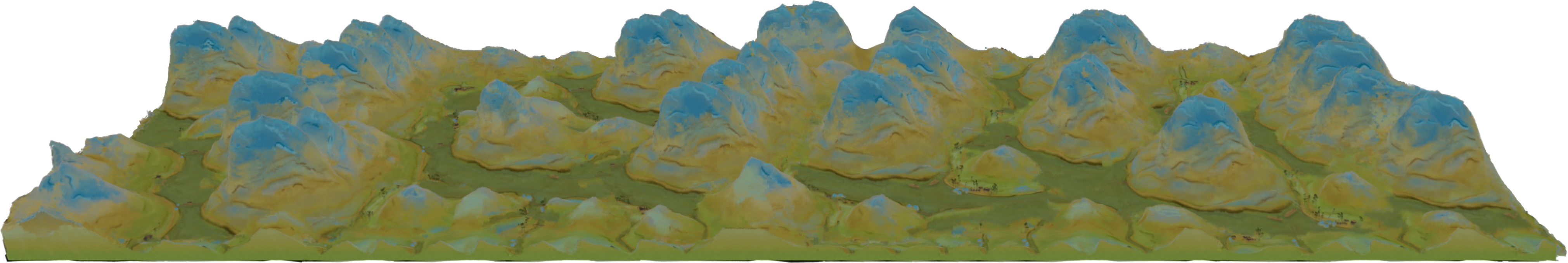

A novel "A Thousand Li of Rivers and Mountains" is rendered from a generated 3D sample. The scene resolution is 1328 × 512 × 200, and the image resolution is 4096 × 1024.

High-quality 3D scenes created by our method (background sky added in post-processing)

We target a 3D generative model for general natural scenes, which are typically unique and intricate. The lack of sufficient training data, together with the difficulty of crafting ad hoc designs in the presence of varying scene characteristics, renders existing setups intractable. Inspired by classical patch-based image models, we advocate synthesizing 3D scenes at the patch level, given a single example. At the core of this work lie important algorithmic designs with respect to the scene representation and the generative patch nearest-neighbor module, which address the unique challenges arising from lifting the classical 2D patch-based framework to 3D generation. Collectively, these design choices contribute to a robust, effective, and efficient model that can generate high-quality general natural scenes with both realistic geometric structure and visual appearance, in large quantities and varieties, as demonstrated on a variety of exemplar scenes.
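To make the idea of patch-level nearest-neighbor matching concrete, here is a minimal sketch, assuming a dense voxel grid with a feature channel and a brute-force squared-L2 search. It is an illustration of the general technique only, not the module used in this work; the function names `extract_patches` and `nearest_exemplar_patches` are hypothetical.

```python
import numpy as np

def extract_patches(volume, size):
    """Collect every dense size^3 patch of a (D, H, W, C) volume.

    Returns the flattened patches and the corner coordinate of each one.
    """
    d, h, w, _ = volume.shape
    patches, corners = [], []
    for z in range(d - size + 1):
        for y in range(h - size + 1):
            for x in range(w - size + 1):
                patches.append(volume[z:z + size, y:y + size, x:x + size].ravel())
                corners.append((z, y, x))
    return np.stack(patches), np.array(corners)

def nearest_exemplar_patches(query, exemplar, size=3):
    """For each patch of `query`, find the closest patch of `exemplar`.

    Assumption: exhaustive squared-L2 matching, which is only practical
    for tiny volumes. Returns the matched exemplar corners and distances.
    """
    q_patches, _ = extract_patches(query, size)
    e_patches, e_corners = extract_patches(exemplar, size)
    # Pairwise squared distances, shape (num_query, num_exemplar).
    d2 = ((q_patches[:, None, :] - e_patches[None, :, :]) ** 2).sum(axis=-1)
    idx = d2.argmin(axis=1)
    return e_corners[idx], d2[np.arange(len(idx)), idx]

# Toy usage: a crop of the exemplar should match its own location exactly.
rng = np.random.default_rng(0)
exemplar = rng.random((5, 5, 5, 1))
crop = exemplar[1:4, 1:4, 1:4]
corners, dists = nearest_exemplar_patches(crop, exemplar, size=3)
```

The exhaustive search scales with the product of the two patch counts, so a sketch like this is useful only for reasoning about the matching step on small grids.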



Users can manipulate a 3D proxy, which can be the underlying mapping field or a mesh, to edit scenes, for example by removal, duplication, or modification.

Our method can resize a 3D scene to a target size while maintaining the local patches in the exemplar.

Given two scenes A and B, we create a scene with the patch distribution of A, but which is structurally aligned with B.

With the coordinate-based representation, we can easily re-decorate generated scenes by simply remapping them to exemplars with a different appearance.
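The remapping idea can be sketched as follows, under the assumption that a generated scene stores, per voxel, an integer coordinate into the exemplar grid, and that the alternative exemplar shares the same grid layout. The helper `decode` and the toy arrays are hypothetical and serve only to illustrate the lookup.

```python
import numpy as np

def decode(coord_map, exemplar):
    """Look up exemplar values at the coordinates stored in each voxel.

    coord_map: integer array of shape (D, H, W, 3) holding (z, y, x) indices.
    exemplar:  array of shape (d, h, w, C) holding per-voxel appearance.
    """
    z, y, x = coord_map[..., 0], coord_map[..., 1], coord_map[..., 2]
    return exemplar[z, y, x]

# Two toy exemplars with the same layout but different appearance values.
exemplar_a = np.arange(8, dtype=float).reshape(2, 2, 2, 1)
exemplar_b = exemplar_a + 100.0

# A tiny "generated scene": three voxels, each pointing into the exemplar.
coord_map = np.array([[0, 0, 0], [1, 1, 1], [0, 1, 0]]).reshape(3, 1, 1, 3)

scene_a = decode(coord_map, exemplar_a)  # appearance taken from exemplar A
scene_b = decode(coord_map, exemplar_b)  # same structure, appearance from B
```

Because the generated structure lives entirely in `coord_map`, swapping the exemplar changes the appearance without re-running any synthesis in this simplified setting.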

Samples generated with images collected from a real-world scenic site, Bryce Canyon (©2022 Google). Notably, we only synthesize the region of interest (i.e., the odd rocks); the background is modeled separately with an independent implicit neural network.

@inproceedings{weiyu23Sin3DGen,

author = {Weiyu Li and Xuelin Chen and Jue Wang and Baoquan Chen},

title = {Patch-based 3D Natural Scene Generation from a Single Example},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2023},

}